Here we are, at the end of ICM! How time flies.

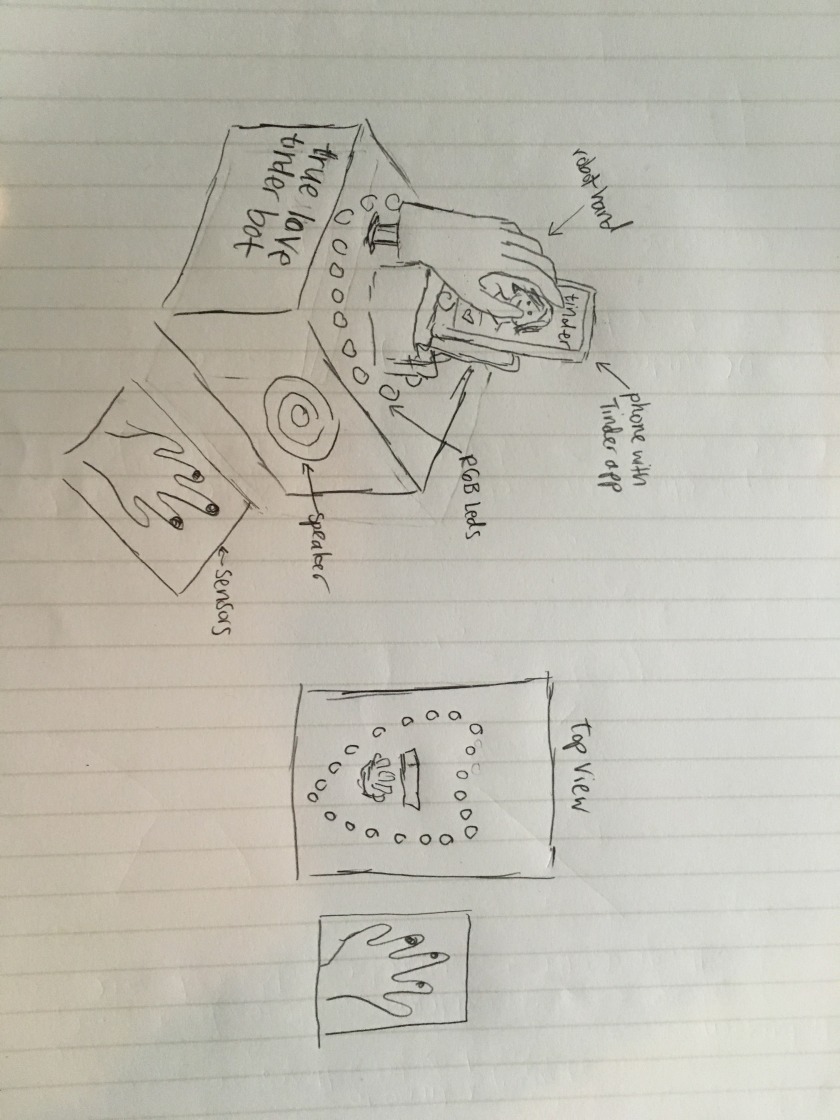

This is the first prototype of my ICM/pcomp final project, the True Love Tinder Robot. The fabrication and wiring aren’t quite done yet, but since this post documents my ICM work, I’ll focus on the code, which is further along.

Here’s how it works:

You put your phone down in front of the robot hand with Tinder open, then put your hands on the sensors. The robot begins talking, telling you that you have a few seconds to look carefully at each Tinder profile. As you look, the robot reads your heart’s desire through the sensors and then swipes on your phone for you*, announcing what it’s doing all the while.

*(Okay, the physical swiping part isn’t done yet, but it will be next week!)
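The flow above depends on telling users apart: hands on the sensors start a session, and a long enough stretch with no sensor reading means the user has left, so the next user starts from the beginning. A minimal desktop C++ sketch of that hand-off, not the project’s actual code: the reading threshold and the 10-second timeout are hypothetical values, a “reading” is just an int, and the caller passes in the current time in place of millis().

```cpp
#include <cassert>

// Hypothetical values, for illustration only.
const int kPresenceThreshold = 100;       // above this, we treat the hands as on the sensors
const unsigned long kUserGoneMs = 10000;  // this long with no reading means the user left

struct PresenceTracker {
  bool userPresent = false;     // is someone currently at the robot?
  unsigned long lastSeenMs = 0; // last time a reading was above the threshold

  // Feed one sensor reading taken at nowMs (on the Arduino, nowMs = millis()).
  // Returns true exactly once per user: when their hands first touch the sensors.
  bool update(int reading, unsigned long nowMs) {
    if (reading > kPresenceThreshold) {
      lastSeenMs = nowMs;
      if (!userPresent) {
        userPresent = true;
        return true;  // new user: this is where the intro would start
      }
    } else if (userPresent && nowMs - lastSeenMs >= kUserGoneMs) {
      userPresent = false;  // timed out: the next user starts from the beginning
    }
    return false;
  }
};
```

Short gaps (a hand lifted for a moment) don’t end the session; only a gap of at least kUserGoneMs does.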

Now, about the code:

One of the biggest coding challenges in this project for me was counting and timing. A number of things in the project depend on time: how long it takes to get a reading, how long you have before the robot swipes, and how much time can pass with no sensor reading before the robot decides that the user has left and the next user should start from the beginning of the sequence. On Arduino, the main built-in clock is millis(), which returns the number of milliseconds since the program started running. That means there’s no simple way to say, “when this event happens, do something for five seconds, then do something else for three seconds, and then start over.” (delay() can wait for a set time, but it pauses the whole program, so the sensors can’t be read while it waits.)
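The “five seconds, then three seconds, then start over” pattern can be built on top of millis() without delay() by remembering when the current phase began and checking the elapsed time on every pass through loop(). A minimal desktop C++ sketch, assuming a hypothetical PhaseSequencer helper (not part of Arduino or the project) and passing the current time in where the Arduino would use millis():

```cpp
#include <cassert>
#include <cstddef>

// Non-blocking "phase 0 for 5 s, then phase 1 for 3 s, then start over".
// On an Arduino you would call update(millis()) on every pass through loop().
struct PhaseSequencer {
  static constexpr std::size_t kPhases = 2;
  unsigned long durationsMs[kPhases] = {5000, 3000};  // the example durations from the text
  std::size_t phase = 0;           // index of the active phase
  unsigned long phaseStartMs = 0;  // when the active phase began

  // Call when the triggering event happens.
  void start(unsigned long nowMs) {
    phase = 0;
    phaseStartMs = nowMs;
  }

  // Returns the phase active at nowMs. It never waits, so the rest of
  // loop() (reading sensors, etc.) keeps running in between calls.
  std::size_t update(unsigned long nowMs) {
    // Unsigned subtraction gives the right elapsed time even if the clock wraps.
    while (nowMs - phaseStartMs >= durationsMs[phase]) {
      assert(durationsMs[phase] > 0);      // a zero-length phase would loop forever
      phaseStartMs += durationsMs[phase];  // advance by the planned length so timing doesn't drift
      phase = (phase + 1) % kPhases;       // after the last phase, start over
    }
    return phase;
  }
};
```

Advancing phaseStartMs by the planned duration, rather than setting it to nowMs, keeps the cycle on schedule even if loop() checks in a little late.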

I did a few things to solve this problem. The first was turning my series of convoluted if statements into a finite state machine, which made the logic much easier to organize.

    // state machine
    #define waiting 1
    #define checkIfNewUser 2
    #define newUser 3
    #define giveIntro 4
    #define beginSwiping 5
    #define calculate 6
    #define changeHasSwipedLeft 7
    #define changeHasSwipedRight 8
    #define swipeLeft 9
    #define swipeRight 10
    #define getNoSensorTime 11
    #define ending 12
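An alternative to a list of #defines is an enum, which gives the states a type of their own; compilers such as GCC can then warn (with -Wall) when a switch leaves a state unhandled. The desktop C++ sketch below reuses five of the state names above, but the transition rules are hypothetical: only the 9-second return from swipeLeft to beginSwiping comes from this project’s code, the other conditions and timings are made up for illustration, and the real machine has more states in between (calculate, for example).

```cpp
#include <cassert>

// The states as an enum instead of #defines (a subset of the names above).
enum State { waiting, giveIntro, beginSwiping, swipeLeft, swipeRight };

// Hypothetical transition rules, for illustration only.
State nextState(State state, unsigned long secondsInState,
                bool handsOnSensors, bool likesProfile) {
  switch (state) {
    case waiting:
      return handsOnSensors ? giveIntro : waiting;          // someone arrived
    case giveIntro:
      return secondsInState >= 5 ? beginSwiping : giveIntro;
    case beginSwiping:
      if (!handsOnSensors) return waiting;                  // user left: start over
      return likesProfile ? swipeRight : swipeLeft;
    case swipeLeft:
    case swipeRight:
      return secondsInState >= 9 ? beginSwiping : state;    // next profile after 9 s
  }
  return waiting;  // not reached; keeps compilers from warning about a missing return
}
```

Keeping the transitions in one function that returns the next state makes each rule easy to test on its own, separately from the servo, LED, and speech side effects.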

The second was saving a timestamp whenever something crucial happened, then subtracting it from the current time to get a timer. Here the time is measured in seconds, by dividing millis() by 1000.

    static unsigned long ts;  // last saved time, in seconds (millis() / 1000 at the last crucial event)
    timePassed = (millis() / 1000) - ts;  // the amount of time passed is the current time minus the last time saved
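One subtlety with this approach: on most Arduino boards millis() is a 32-bit unsigned long, so it wraps back to 0 after about 49.7 days. Subtracting in milliseconds first and dividing afterwards survives the wrap, while dividing first does not. That only matters for a sketch left running for weeks, but it costs nothing. A minimal desktop C++ sketch, using a hypothetical StateTimer helper (not from the project) and uint32_t to mimic the Arduino’s 32-bit counter:

```cpp
#include <cassert>
#include <cstdint>

// "Save a timestamp, subtract it later" as a tiny reusable timer.
// nowMs stands in for millis(); uint32_t mimics the 32-bit Arduino counter.
struct StateTimer {
  uint32_t startMs = 0;  // time of the last crucial event, in milliseconds

  // Call when something crucial happens, e.g. on entering a new state.
  void restart(uint32_t nowMs) { startMs = nowMs; }

  // Whole seconds since the last restart(). Subtracting in milliseconds
  // first and dividing afterwards stays correct when the counter wraps
  // to 0; (nowMs / 1000) - (startMs / 1000) would jump to a huge value.
  uint32_t secondsPassed(uint32_t nowMs) const {
    return (nowMs - startMs) / 1000;
  }
};
```

For example, a timer restarted 2 seconds before the counter wraps and read 3 seconds after it still reports 5 seconds.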

Finally, I used boolean flags to mark when an event had already happened, so that an action like a swipe fires only once each time its state is entered.

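    case changeHasSwipedLeft:
      hasSwipedLeft = false;  // reset the flag so the next left swipe can happen
      state = swipeLeft;
      break;

    case swipeLeft:
      hasSwiped = true;
      // after 6 seconds, swipe left exactly once: speak, move the hand, light the red LED
      if (timePassed >= 6 && !hasSwipedLeft) {
        emic.speak(swipeLeftWords[iLeft]);  // say the next left-swipe line
        iLeft++;
        hasSwipedLeft = true;  // flag that this swipe has already happened
        handServo.write(servoLeft);
        digitalWrite(swipeLeftRedLed, HIGH);
        digitalWrite(swipeRightGreenLed, LOW);
      }
      // after 9 seconds, go back and get ready for the next profile
      if (timePassed >= 9) {
        state = beginSwiping;
      }
      break;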
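If iLeft is never reset elsewhere in the sketch (the snippet doesn’t show whether it is), swipeLeftWords[iLeft] will eventually read past the end of the array once every line has been used. A minimal desktop C++ sketch of the same one-shot-flag pattern with the index wrapping around instead. Assumptions: speak() is a hypothetical stand-in for emic.speak() that just records the line, the three lines are placeholders rather than the robot’s real script, and the servo and LED calls are left out.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for emic.speak(): records what would have been said.
std::vector<std::string> spoken;
void speak(const std::string& line) { spoken.push_back(line); }

// Placeholder lines; not the robot's actual script.
const char* const swipeLeftWords[] = {"Left line 1", "Left line 2", "Left line 3"};
const std::size_t kNumLeftWords = sizeof(swipeLeftWords) / sizeof(swipeLeftWords[0]);

std::size_t iLeft = 0;       // which line to say next
bool hasSwipedLeft = false;  // one-shot flag for the current swipe

// Mirrors the changeHasSwipedLeft state: clear the flag before each swipe.
void enterSwipeLeft() { hasSwipedLeft = false; }

// Mirrors the swipeLeft state; called on every loop() pass with the timer value.
void runSwipeLeft(unsigned long timePassed) {
  if (timePassed >= 6 && !hasSwipedLeft) {
    assert(iLeft < kNumLeftWords);        // never index past the end of the array
    speak(swipeLeftWords[iLeft]);
    iLeft = (iLeft + 1) % kNumLeftWords;  // wrap around instead of running off the end
    hasSwipedLeft = true;                 // stay quiet until the next swipe
  }
}
```

Calling runSwipeLeft() on every pass from 6 seconds onward still speaks only once, because the flag stays set until enterSwipeLeft() clears it for the next profile.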

Another thing I thought about a lot was the robot’s personality. It was important to me that the robot speaks to you, both to explain what’s going on and to add to the experience. I was inspired by GLaDOS from the video game Portal, an AI who is passive-aggressive, dark, and very funny. The lines I wrote for the robot are an attempt to convey a similar sense of character, even in a short interaction.


Overall, I’m happy with the way this is turning out. The physical part isn’t finished yet, but I’m glad that the code does what I want it to. I learned a lot during the process, not just specific coding techniques but also how to find and use libraries, upload to GitHub, and generally Google around for answers to my many questions.

My code is on GitHub.