This week in pcomp we got our hands dirty with circuits and components.

I spent a good amount of time getting to know the multimeter, learning how to measure resistance, voltage, and current. One of the hardest parts was holding the tiny components, probing them with the multimeter, and trying to take pictures, all at the same time!

I also started building basic circuits with switches and LEDs.

To do the exercise with the potentiometer, I realized I had to solder wires onto it, so I also learned to solder for the first time. It definitely wasn’t the prettiest solder job in the world, but it worked.

Applying what we learned in the labs, our assignment was to make a switch. We were encouraged to be creative — “A switch is nothing more than a mechanism to bring two pieces of conductive material together and separate them.”

I decided to make a game.

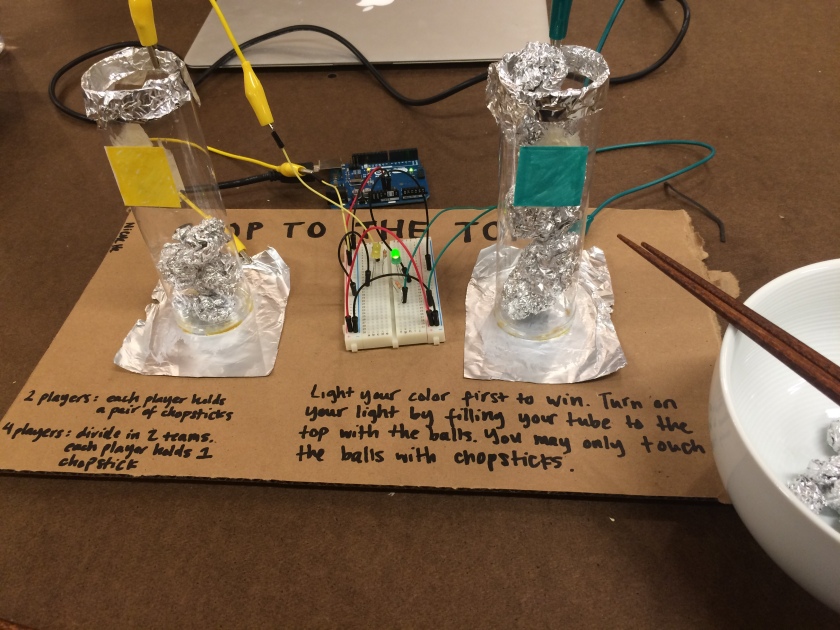

I wanted to do something that tested people’s ability to use chopsticks. I decided to make something with two conductive parts separated by a wide gap. Players would bridge that gap by moving other conductive objects into it, completing the circuit and turning on a light.

To encourage people to use chopsticks to pick things up rather than push things around, I had to make the gap between the two conductive points vertical rather than horizontal on the table. Aluminum foil, cheap and abundant, was my material of choice for conducting electricity. So I made a bunch of aluminum foil balls of different sizes for people to pick up.

The tubes I used were originally pen holders that I had cut the bottoms out of, gluing foil at the bottom and the top. I had to test whether a bunch of foil balls stacked on top of each other would be conductive enough to connect the bottom to the top, so I first checked with the multimeter and then wired up a circuit that made an LED light up.
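The stacked-foil test boils down to Ohm's law: whatever resistance the balls add sits in series with the LED's resistor and dims the light. Here is a rough Python sketch of that check. The 5 V supply, 2 V LED forward voltage, 220 Ω resistor, and the stack resistances are all assumed values for illustration, not measurements from this build.

```python
def led_current_ma(supply_v, led_forward_v, resistor_ohms, stack_ohms):
    """Approximate LED current in milliamps for a simple series circuit.

    The foil stack is treated as one extra resistor in series with the
    LED's current-limiting resistor.
    """
    headroom_v = supply_v - led_forward_v
    if headroom_v <= 0:
        # Not enough voltage to turn the LED on at all.
        return 0.0
    return headroom_v / (resistor_ohms + stack_ohms) * 1000


# Assumed example values, not taken from the actual circuit.
SUPPLY_V = 5.0
LED_FORWARD_V = 2.0
RESISTOR_OHMS = 220.0

for stack_ohms in (0.0, 10.0, 100.0):
    current = led_current_ma(SUPPLY_V, LED_FORWARD_V, RESISTOR_OHMS, stack_ohms)
    print(f"stack of {stack_ohms:5.1f} ohms -> about {current:.1f} mA through the LED")
```

With these made-up numbers, even a 100 Ω stack only drops the current from roughly 13.6 mA to about 9.4 mA, so the LED would still be visibly lit.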

In order to make this game a race between two teams, I had two tubes and wired up two switches and LEDs of different colors in parallel on my breadboard. So now there’s a green team and a yellow team.
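Wiring the two tubes in parallel means each tube only controls its own LED. Below is a toy Python model of that idea. The `Tube` class, the `capacity` of 3 balls, and the `lit_leds` helper are hypothetical names and numbers made up for illustration; the real circuit has no code in it at all.

```python
from dataclasses import dataclass


@dataclass
class Tube:
    """One parallel branch: a foil-ball switch in series with one LED."""
    team: str
    capacity: int  # hypothetical number of balls needed to reach the top foil
    balls: int = 0

    def add_ball(self):
        # Extra balls beyond the top of the tube don't change anything.
        self.balls = min(self.balls + 1, self.capacity)

    @property
    def switch_closed(self):
        # The switch closes once the stack bridges the bottom and top foil.
        return self.balls >= self.capacity


def lit_leds(tubes):
    """Return the teams whose LED is on. Branches never affect each other."""
    return [tube.team for tube in tubes if tube.switch_closed]


green = Tube(team="green", capacity=3)
yellow = Tube(team="yellow", capacity=3)

for _ in range(3):
    green.add_ball()
yellow.add_ball()

print(lit_leds([green, yellow]))
```

Because each LED depends only on its own tube, one team filling their tube never lights the other team's color.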

I’m not 100% sure the schematic I drew is accurate, mostly because I’m not sure if the line in the middle is correct. But I think it is?

It started to come together, and I wrote the rules:

Light your color first to win. Turn on your light by filling your tube to the top with the balls. You may only touch the balls with chopsticks.

(2 players: each player holds a pair of chopsticks)

(4 players: divide into 2 teams, each player holds 1 chopstick)

A silly game deserves a silly name, and thus I call it CHOP TO THE TOP!!!

And voilà. I had Aaron (yellow) and Sam (green) play the game to demo. The LEDs are tiny, so they’re a little hard to see, but it works!